Migrating to CDN77 Object Storage

Migrating Data from S3-Compatible Storage Provider Using Rclone

Rclone is a versatile command-line tool that allows you to copy data between different cloud storage providers, including S3-compatible ones. In this guide, we'll walk through the process of using Rclone to migrate data from an S3-compatible storage provider.

Prerequisites

Install Rclone: You can download and install Rclone from the official website (https://rclone.org/downloads/).

Migration Steps

Step 1: Configure Rclone for your Source and Target Buckets

Open your terminal or command prompt and run the following command to configure Rclone:

rclone config

Follow the interactive configuration wizard:

- Choose n for a new remote.

- Give your remote a name (e.g., "s3-source").

- Select the S3-compatible storage provider you are migrating data from.

- Enter the necessary configuration details, including endpoint URL, access key, secret key, and any other provider-specific information.

Repeat the above steps to configure a remote for your target bucket (e.g., "s3-target"). When asked to pick the S3-compatible storage provider for the target, choose Ceph Object Storage.
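
As an alternative to the wizard, the two remotes can also be written directly to an Rclone configuration file. The following is a minimal sketch, not the only way to do it: the file name rclone-migration.conf and the CONF variable are assumptions made for this example, every value in angle brackets is a placeholder, and "provider = Other" stands in for whichever provider you are migrating from.

```shell
# Sketch: write both remotes to a dedicated config file instead of using the wizard.
# CONF and its default file name are hypothetical; pick any path you like.
CONF="${CONF:-./rclone-migration.conf}"

cat > "$CONF" <<'EOF'
[s3-source]
type = s3
# Placeholder: replace "Other" with the provider you are migrating from.
provider = Other
access_key_id = <source-access-key>
secret_access_key = <source-secret-key>
endpoint = <source-endpoint-url>

[s3-target]
type = s3
provider = Ceph
access_key_id = <target-access-key>
secret_access_key = <target-secret-key>
endpoint = https://<region>.cdn77-storage.com
EOF

# The file contains secrets, so restrict it to the current user.
chmod 600 "$CONF"
```

To use this file, pass it to Rclone with the --config flag, for example: rclone --config ./rclone-migration.conf listremotes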

Step 2: Copy Data from the Source to the Target Bucket

Now that you've configured both source and target remotes, you can start copying data. Use the rclone copy command to transfer your data:

rclone copy s3-source:source-bucket-name s3-target:target-bucket-name

Replace source-bucket-name with the name of your source bucket and target-bucket-name with the name of your target bucket. This command will copy all data from the source to the target bucket.
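
Before running the real copy, it can be worth previewing what Rclone would transfer. The sketch below wraps the copy command with the --dry-run flag, which reports the planned operations without writing anything to the target. The function name preview_copy and the RCLONE variable are hypothetical helpers introduced for this example.

```shell
# RCLONE can be overridden (for example with "echo") to inspect the command line.
RCLONE="${RCLONE:-rclone}"

# preview_copy SOURCE_BUCKET TARGET_BUCKET
# Shows what would be copied; --dry-run makes no changes on the target.
preview_copy() {
  "$RCLONE" copy --dry-run "s3-source:$1" "s3-target:$2"
}

# Example call:
#   preview_copy source-bucket-name target-bucket-name
```

If the preview looks right, run the same command without --dry-run.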

If you want to copy only specific files or directories, you can specify them in the command. For example:

rclone copy s3-source:source-bucket-name/path/to/source-data s3-target:target-bucket-name/path/to/target-location

Step 3: Monitor the Progress of the Data Transfer

To follow the transfer as it runs, add the --progress flag to the copy command:

rclone copy --progress s3-source:source-bucket-name s3-target:target-bucket-name

Rclone will now display progress information in real time, including the number of files transferred and their sizes.
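
For long transfers it may also help to keep a log you can review afterwards. The following sketch combines --progress with Rclone's --log-file and --log-level flags. The function name copy_with_log, the RCLONE variable, and the log file name are assumptions made for this example.

```shell
# RCLONE can be overridden (for example with "echo") to inspect the command line.
RCLONE="${RCLONE:-rclone}"

# copy_with_log SOURCE_BUCKET TARGET_BUCKET LOG_FILE
# Shows live progress and writes INFO-level messages to LOG_FILE.
copy_with_log() {
  "$RCLONE" copy --progress --log-file "$3" --log-level INFO \
    "s3-source:$1" "s3-target:$2"
}

# Example call:
#   copy_with_log source-bucket-name target-bucket-name migration.log
```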

Step 4: Verify Data in the Target Bucket

After the data transfer is complete, it's recommended to verify the integrity of the data in the target bucket. You can use rclone check for this. It compares sizes and hashes (MD5 or SHA1) and logs a report of files that don't match.

rclone check s3-source:source-bucket-name s3-target:target-bucket-name

This command reports whether the data in the target bucket matches the source bucket.
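
If the target bucket may also hold files that are not in the source, a one-way check is often more useful. The sketch below uses the --one-way flag, which only checks that source files are present and matching on the target, and --missing-on-dst, which writes the paths missing from the target to a report file. The function name verify_copy, the RCLONE variable, and the report file name are hypothetical.

```shell
# RCLONE can be overridden (for example with "echo") to inspect the command line.
RCLONE="${RCLONE:-rclone}"

# verify_copy SOURCE_BUCKET TARGET_BUCKET REPORT_FILE
# Checks source against target and lists files missing on the target in REPORT_FILE.
verify_copy() {
  "$RCLONE" check --one-way --missing-on-dst "$3" \
    "s3-source:$1" "s3-target:$2"
}

# Example call:
#   verify_copy source-bucket-name target-bucket-name missing.txt
```

Any paths listed in the report can be copied again with rclone copy.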

CDN77 Legacy Storage to Object Storage Migration Guide

Migrating using an intermediary machine

To migrate from our legacy FTP/RSYNC storage zone to a new object storage bucket you can use the Cyberduck CLI (duck) to run a "copy" operation through an intermediary server.

The following command is easy to work with. Proceed with caution, because it can overwrite existing content:

duck --copy ftp://user_xx@push-XX.cdn77.com/www/ s3://<master_access_key>@xx-1.cdn77-storage.com/bucket-name/ --existing compare --retry

This will copy the contents of the FTP “www” directory to the specified object storage bucket.

Running the copy inside a tmux session keeps the process running on the machine even if your connection to it is interrupted. To create a session, use the following:

tmux new-session -s "name-it"
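
A session can also be started detached, with the command to run passed as an argument. The following is a minimal sketch: the function name start_migration and the TMUX_BIN variable are hypothetical helpers, and the session name is only an example.

```shell
# TMUX_BIN can be overridden (for example with "echo") to inspect the command line.
TMUX_BIN="${TMUX_BIN:-tmux}"

# start_migration SESSION_NAME COMMAND
# Starts COMMAND in a detached tmux session (-d) named SESSION_NAME (-s).
# By default the session closes when COMMAND exits.
start_migration() {
  "$TMUX_BIN" new-session -d -s "$1" "$2"
}

# Example call (put your full duck or rclone command in the quotes):
#   start_migration migration 'rclone copy --progress s3-source:source-bucket-name s3-target:target-bucket-name'
# Reattach later with:
#   tmux attach-session -t migration
```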

Various other tools can be used, including rclone.

Non-master Key Upload

Without the region master key, you cannot view, upload to, or download from a bucket on Object Storage unless you specify the bucket explicitly.

With s5cmd, assuming you have set up the .aws/credentials authentication file, a working command using user-generated keys would be:

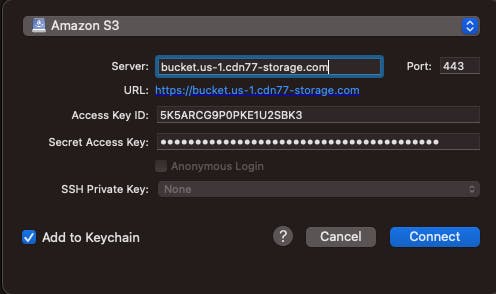

s5cmd --endpoint-url=https://<region>.cdn77-storage.com cp <file> s3://<bucket-name>/<file>

Cyberduck connects to a specific bucket by defining it in the server endpoint as follows:

<bucket-name>.<region>.cdn77-storage.com

Regardless of your key permissions, a connection made in that format only allows reading from the bucket.

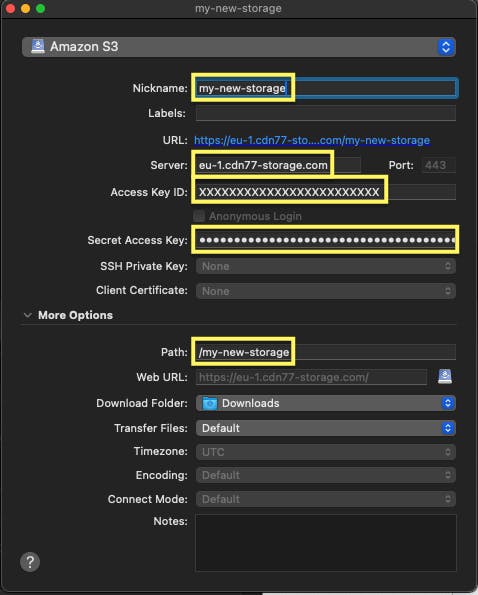

There is a functional workaround using Cyberduck bookmarks. The bookmarks are located on the left side of the top menu bar.

Create a new bookmark and specify the bucket name in the path. The config should look something like this:

Please note that attempting to connect to https://us-1.cdn77-storage.com/bucket directly through Cyberduck will result in a DNS error.

Once connected, you can freely upload content to the specified bucket, as long as your keys have permission to do so.

Updated on 27th November, 2024